Private AI Services—take control of your agents

What follows is yet another comparison of AI services that will be out-of-date in a matter of months. Nevertheless, the good actors will hopefully continue to develop their services in the right direction.

TL;DR

Proton’s Lumo is fully encrypted and Duck.ai prevents profiling; Mistral and others can be used relatively safely for non-sensitive data, if one is careful to opt-out of data sharing, but nothing beats local AI for privacy.

What is “Private AI”?

Privacy in an AI context is not that different from privacy in any other context—you, the end-user, should maintain authority on your information, and be able to decide what information you share about yourself and what you keep private. At its core, this is a value statement that enables personal autonomy and human dignity. Practically speaking, AI privacy prevents your information from being leaked and, in turn, safeguards you from manipulative advertisement and business practices.

Private AI embodies several essential characteristics

- No training on user data

Training data of AI has been proven to be at least partially recoverable from the resulting LLMs, so anything used for AI training can leak to the public internet. With many providers, this includes all your chat content. - No profiling or sharing of user data

The AI should not create a profile or data collection of you that the company then sells to advertisers, insurers and other instances that use it for their own profit. - User data controls

You should maintain authority over your data, and be able to control its sharing and deletion. Preferably hard security through encryption, control can also be implemented through contractual constraints. - No advertising

While currently not an issue, I believe this one will become very important, as purchase services and product placement will be increasingly integrated to AI agents. The AI should not try to nudge you towards making purchases, be it with explicit advertisements or manipulation.

User data includes data accessed through integrations

Privacy-conscious AI services should also strive to keep any data connected to the AI private. In the age of AI agents, this may include your personal emails, calendar information, content of documents you work on and websites you visit.

With data connected to an AI agent, the LLM is allowed to query the data through some kind of filtering. Even with prompt safeguards in place, it is possible to circumvent guardrails and user control through malicious prompt engineering or hijacking attacks, so any system integrated to an AI should be considered to be shared to all aspects of that AI. An agent that browses a website could potentially upload your whole CRM without your knowledge.

Why is AI privacy important

Ensittification is a term coined by Cory Doctorow to describe the cyclical process of platforms decaying in quality. Platforms often start by offering a good service to end-users to attract users. Through investor money, services are fast, free and good in quality. Once a user base is established, service platforms often pivot towards extracting value from its users to its shareholders—prices hike up, ads pop up, algorithms get worse at serving the users, AI slop and brain rot rain supreme.

While platform decay is bad in general, the enshittification of AI agents presents a more severe threat due to the way AI closely interact with people and even take actions on our behalf. AI agents both gather much more information on us than more traditional platforms and are able to influence and manipulate us to a much greater degree than scrolling feeds and search results.

While your browser history tells a lot about you, your chat history tells an even more complete story—in addition to whatever peaks your interest at any given time, it will include rich data on your reactions, your emotional state and perhaps even deeper understanding on your psychological history. The rich profiling data accumulates faster depending on whether you use AI as a search engine, a secretary, an assistant, a life coach, a boyfriend or even a therapist, but even a simple voice control can leak your emotional state and be used to sell you chocolate.

Traditional SEO (Search Engine Optimization) is already taking a backseat to AI Optimization, where social media polls and other easily indexable content is used to make AI recommend certain products over others. As purchase systems are increasingly integrated to AI agents, both explicit product placements/advertisements and implicit nudging will increase in number. It is easy to instruct an AI to not explicitly instruct the user to make a purchase, but start to refer to their phone model as out-dated or highlight the virtues of “much better” models.

Private(ish) Online AI Services

No online service is 100% private, as data is still transmitted outside your personally owned hardware. End-to-end encrypted (E2EE, encrypted in transit) services can be almost completely private, when designed correctly1, and data is also secured at-rest.

Even when physically shared when transmitted to AI providers, data can be (partially) secured from leaks through contractual means, though government agencies and bad actors may still gain access.

The services listed here make an attempt to safeguard your privacy with different levels of security.

Lumo from Proton: Zero access encryption

Lumo is perhaps the most secure online AI service currently available. It is created by the makers of Protonmail, and comes very secure out-of-the-box. Nobody should be able to read your chats but you.

- Zero-access encryption (encrypted end-to-end and at-rest)

The only service I know that claims to provide a service where they themselves do not have any access to user data. - No logs, user data never shared

The default data sharing practices of Lumo are very stringent and good. No data is shared with anyone, used to train AI or logged. - Local servers in Switzerland (EU)

While this limits the selection of LLM models to open-source, it is the only way to provide a truly confidential AI service. A high-privacy jurisdiction is good even for US-based actors. - Auditable Open Source

The code for Lumo is fully open source, which means that it can be audited, even if you won’t make use of it personally.

Limited Free Service, Lumo Plus 12,99 €/m or 119,88 €/y

Plus is needed for unlimited chats, extended chat history and multiple large file uploads.

Duck.ai from DuckDuckGo: Quick and easy

DuckDuckGo’s AI service works as an anonymizing proxy (middleman) between the user and AI service providers. The easy and short url is quick to use on shared computers (though do remember to clear chat history), and contractual protections should be enough for most use-cases. Chat data is still sent to multiple companies for processing, so it’s not good enough for sensitive data.

- Privacy through proxy and anonymization

Routes queries to high-end models (e.g. GPT-5, Claude) but shields identifiable information and bulks requests together with other users

(IP addresses and other identifiers not forwarded) - Contractual safeguarding of user data

Data shared with AI providers; Claims strict contracts with model providers to prevent data usage as per Privacy Terms

(data not used for training, deleted within 30 days) - Chats stored locally in-browser

No login needed! No data stored (though still readable by AI providers in transit) - US-based

Liability for some, a pro for others

Limited Free Service, Subscription 9,99 €/m, 99,99 €/y (includes VPN)

Subscription needed for advanced models.

Kagi Assistant: Search first

Kagi Assistant pairs up with Kagi search engine, and provides a middleman service similar to Duck.ai. If you’re sold on their customizable search engine, the AI proxy is a nice addition.

- Privacy through proxy and anonymization

Routes queries to high-end models (e.g. GPT-5, Gemini2.5, Grok4) but shields identifiable information and bulks requests together with other users

(IP addresses and other identifiers not forwarded) - Contractual safeguarding of user data

Data shared with AI providers; Claims strict contracts with model providers to prevent data usage

(data not used for training, chats deleted after 24 hours by default) - US-based

Liability for some, a pro for others

Premium service starting at $5/m (includes Kagi Search)

Mistral in France: Advanced capabilities in EU

For advanced functionality with a privacy-conscious provider, look to Mistral. Remember to ensure that data sharing is disabled in Privacy settings, after which you can be quite sure that your data is safe.

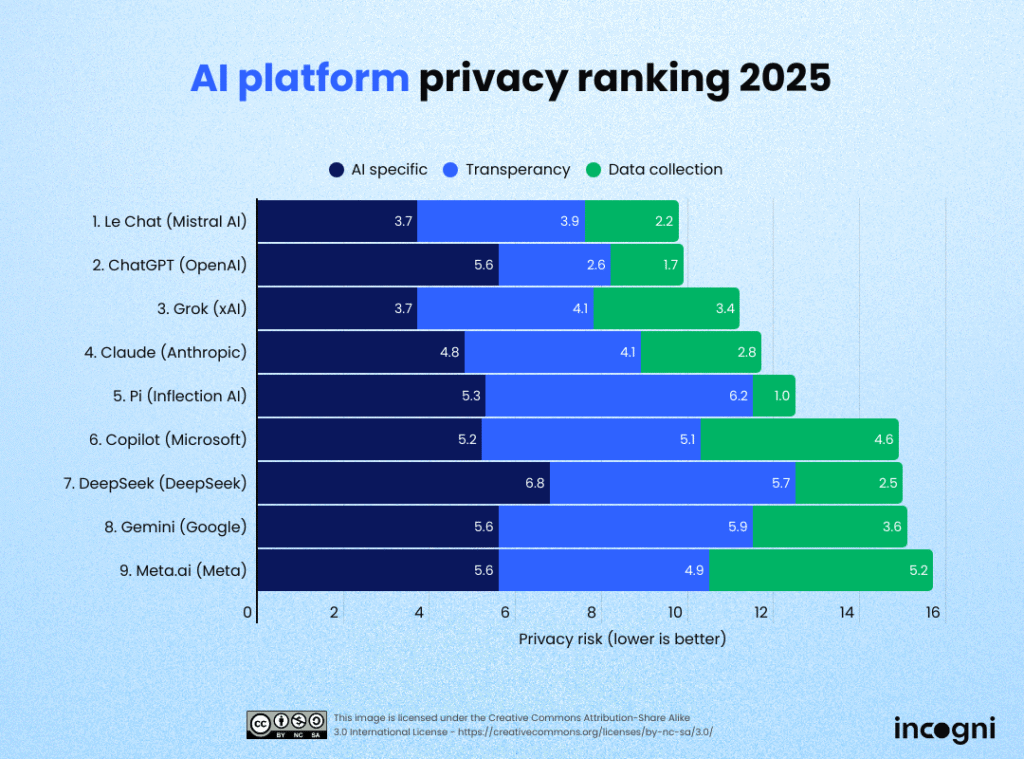

- Secure but not encrypted

Most privacy friendly of traditional AI platforms in Incogni’s 2025 LLM privacy ranking (especially when opting out of data sharing) - High-end Capabilities

Has it all: Agents, Memory, Web search with citations, Academic Deep Research, Code Interpreter, Image Generation, Voice mode (Voxtral), Canvas editing, Connectors to Email, Calendar, etc. - Minimal data collection

Just remember to opt-out in Settings –> Privacy

Chat data is, of course, still sent to them. - EU-based

As above, a high-privacy jurisdiction is good even for US-based actors.

Limited Free Service, Pro 17,99€/m

Other online AI platforms

Other AI Platforms came close to Mistral in Incogni’s AI platform privacy ranking (click below for full report), but I believe Mistral is the only one situated in a high-privacy jurisdiction (Not US or China). Their privacy-level is also very high with the correct, easily configurable privacy settings.

Local AI—Gold-standard privacy

(it’s not just for professionals)

Running Local AI warrants a full blog-post on its own, but as it allows AI use without sending any data to anyone, a short mention is warranted.

Using an easy-to-install applications, you can run an AI on your own computer without much technical expertise. It is slower and less impressive in capabilities in comparison to online services, but it is completely free to use, good enough for many use-cases and the gold-standard in privacy.

Some hardware is required.

You need to fit the size of the LLM you run with your local hardware. For example, Jan has a quick button for setting up a local model, and will assist you in selecting something compatible with your hardware. As a rule-of-thumb, bigger numbers (4B, 20B, etc.) mean bigger models and bigger hardware requirements. Running more impressive models requires more memory, preferably combined with a gaming GPU.

Other good applications that require a little more technical expertise are LM Studio and Ollama.

Honorable mention for nerds:

OpenRouter’s Zero-Data-Retention API

For developers comfortable with API calls, OpenRouter provides Zero-Retention API access to most current LLMs. This allows for high privacy utilizing high-end services in your own code or a local client program, such as the previously mentioned Jan or LM Studio.

By setting an account-wide flag or a per-request parameter, you can ensure that none of your AI usage is saved for any length of time, as API calls are only routed to model enpoints with a verified ZDR policy.

Conclusion

Middlemen like Duck.ai or Kagi Assistant can try to limit data sharing through contractual means, but they still physically transfer data to external companies. These companies can have data practices that override contractual promises, such as OpenAI’s current court order to preserve all user logs and chat content. Middlemen help more with profiling prevention than data leaks.

Mistral seems to have the best privacy policies of the ‘regular’ AI platforms, though you need to trust the usual wall-of-legalese that is their privacy policy.

“AIs from Big Tech are built on harvesting your data. But Proton is different. We keep no logs of what you ask, or what I reply. Your chats can’t be seen, shared, or used to profile you.”

– Lumo

Technically, the only truly private service on the list is Lumo, with its unique zero access encryption (everybody encrypts in-transit). With other providers, you need to have faith in their data sharing contracts and policies.

For truly private chats, Lumo is as close as one can get without running AI on your own hardware.

- It’s good to note, that services like WhatsApp can claim to be end-to-end encrypted (E2EE) and still copy some of your data (often at least the metadata), as they are still able to read your data at rest, and E2EE only secures transfers. Message content can legitimately be read for user reports, court mandates, etc. ↩︎